Want to jump straight to the code? Go here.

Once I had gone through the core ideas from the “Attention Is All You Need” paper (my notes) and studied it in depth, I understood the main variations of how a transformer can be used: the encoder-decoder way for seq2seq tasks, the decoder-only autoregressive way for language modelling, and a few other unique ones.

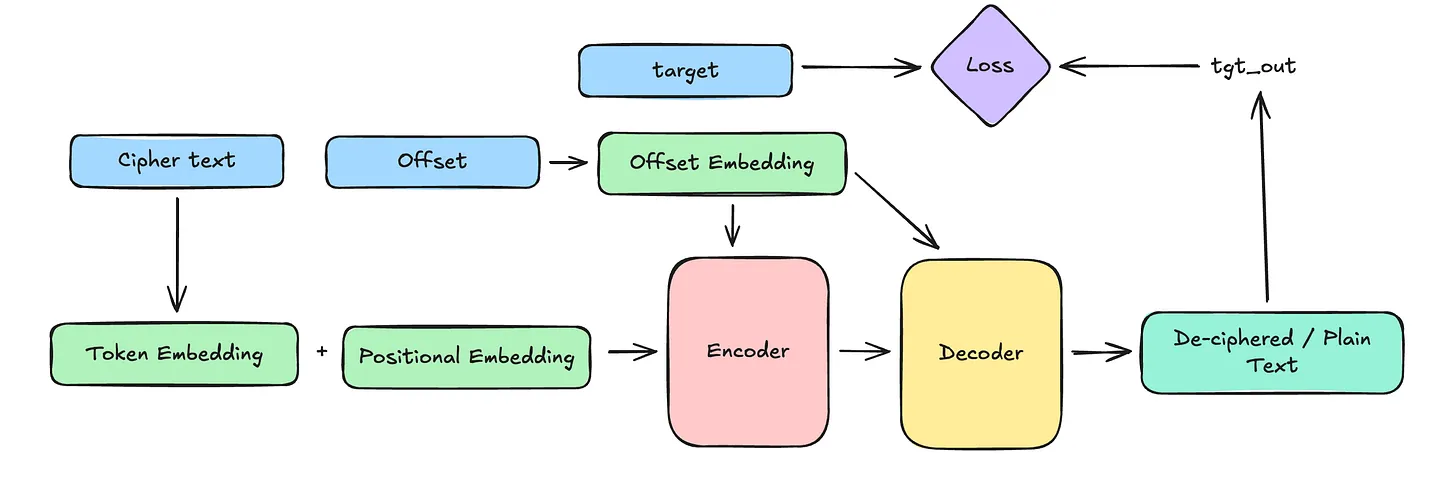

I first wanted to test out the seq2seq approach and understand it through code, not just by building a model but also by coming up with my own simple dataset. I had seen numerous examples of the encoder-decoder stack being used for translation tasks, and it occurred to me that I could try “deciphering”, which is a very similar task. It also gave me a “difficulty knob” on the dataset, which would let me start off super simple and then ramp up the complexity.

Hence the Simple Cipher Dataset was born: Pythonista7/dataset_simple_ciphers
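To make the “difficulty knob” idea concrete, here is a minimal sketch of how (ciphertext, plaintext) pairs for a deciphering task could be generated. This is not the actual code behind the dataset above; the Caesar cipher, the function names `caesar_encrypt` and `make_pairs`, and the `max_shift` parameter are all my own assumptions for illustration. Here the knob is simply the range of shifts the model has to cope with.

```python
import random
import string

ALPHABET = string.ascii_lowercase


def caesar_encrypt(plaintext: str, shift: int) -> str:
    """Shift each lowercase letter by `shift`; leave other characters untouched."""
    out = []
    for ch in plaintext:
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + shift) % len(ALPHABET)])
        else:
            out.append(ch)
    return "".join(out)


def make_pairs(n: int, max_shift: int, length: int = 8, seed: int = 0):
    """Build n (ciphertext, plaintext) pairs from random lowercase strings.

    `max_shift` is the hypothetical difficulty knob: with max_shift=1 every
    example uses the same shift, while larger values mix several shifts
    into the same dataset.
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        plain = "".join(rng.choice(ALPHABET) for _ in range(length))
        shift = rng.randint(1, max_shift)
        pairs.append((caesar_encrypt(plain, shift), plain))
    return pairs


if __name__ == "__main__":
    # Easiest setting: a single fixed shift of 1.
    for cipher, plain in make_pairs(3, max_shift=1):
        print(cipher, "->", plain)
```

The ciphertext is the source sequence and the plaintext is the target, which is the same shape as a translation pair, just with a much smaller and more regular “language”.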

Then came the process of actually coding up, training, debugging and eval’ing my own model! Lots of brutal lessons were learnt in the process, which I try to capture in this blog.

Nevertheless, after a fruitful period of suffering, I was able to accomplish this task! You can find the complete process, from data to evals, in this notebook.

Here’s a quick overview of what’s actually in here: